Peter J. Sucy's MyP.O.D. Reclining Workstation is now The Zero Gravity Work Pod.

A Kickstarter project has been started to fund the development of a prototype of the reclining desk design. The campaign's goal is just $2400, to be used to purchase a refurbished Perfect Chair (~$1500) plus the materials and hardware needed to complete a prototype.

This step, Phase II, will allow me and the manufacturer to refine and custom tailor the desk to the most popular zero gravity recliner on the market, the Perfect Chair. Our plan is to build in enough adjustment to accommodate many other recliners.

Link to Kickstarter Project:

Monday, November 11, 2013

Wednesday, June 24, 2009

What are Algorithmically Synthesized Digital Paintings?

ASDP is simply one of the terms I use to describe my digital painting technique. A couple of others are photo-synthesis, graphic interpretations, and micro-painting.

I use a unique program called a paint synthesizer. Also known as Studio Artist 3.0, the program allows you to select a source photograph and have it re-rendered to a canvas (paint window). Just like a music synthesizer has patches to make your notes sound like a wide variety of instruments, SA3 has patches that can make your original photo look like a pencil sketch, a watercolor, an oil painting or any of thousands of other natural media presets that are included with the program.

SA3 lets you build and blend layers of these renderings within the program, although I prefer to save the individual renderings out as files which I then load into Photoshop. I have about a dozen or so patches that I've found work well to create the styles of painting I like to use. There is one patch that creates a soft, blurry watercolor wash that I tighten up with a very small soft brush. A rough circular brush cloner gives me a rough brush texture that I can modify with PS filters. A very small brush cloner provides detail. A hard-edged, small square-point detail color sketcher adds some variation and detail to the images. A pencil outliner and a lum hatch sketcher, which provide some edges and extra detail in the shadows, round out the primary ones I tend to favor. I've yet to explore all the thousands of other patches available but I try to sample a few new ones every now and then.

After rendering out these layers I import them into PS and begin rebuilding the image from them. Mind you, most of these layers only vaguely resemble the original photo, but I begin with the watercolor wash as the background layer and then add the other layers, selecting a different blending style for each: darken for some layers, lighten for others, and often color burn for the edge layers. One trick I often use is to import the original image as a layer and apply the Stylize-Find Edges filter to it to create nice pencil or highlight layers (by inverting and using the color dodge or screen blend option).
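For readers who think in code, here is a minimal sketch of what those blending styles do to a single color channel. It uses the commonly published formulas for these modes with channel values scaled from 0.0 to 1.0; Photoshop's internal arithmetic may differ in detail, and the function names and the `opacity` parameter are my own illustrative choices, not anything taken from Photoshop or Studio Artist.

```python
# Commonly published per-channel blend formulas (values in 0.0..1.0).
# Assumption: these approximate, but are not guaranteed to match,
# Photoshop's own implementation. All names here are hypothetical.

def darken(base, top):
    """Keep whichever value is darker."""
    return min(base, top)

def lighten(base, top):
    """Keep whichever value is lighter."""
    return max(base, top)

def screen(base, top):
    """Invert both, multiply, invert again; the result is never darker."""
    return 1.0 - (1.0 - base) * (1.0 - top)

def color_burn(base, top):
    """Darken the base according to the top layer."""
    if base >= 1.0:
        return 1.0
    if top <= 0.0:
        return 0.0
    return max(0.0, 1.0 - (1.0 - base) / top)

def color_dodge(base, top):
    """Brighten the base according to the top layer."""
    if base <= 0.0:
        return 0.0
    if top >= 1.0:
        return 1.0
    return min(1.0, base / (1.0 - top))

def blend_layers(base_pixels, top_pixels, mode, opacity=1.0):
    """Blend two equal-length lists of channel values with a given mode,
    then mix the result back toward the base by the layer opacity."""
    return [b + (mode(b, t) - b) * opacity
            for b, t in zip(base_pixels, top_pixels)]
```

Calling `blend_layers(wash, edges, color_burn, opacity=0.5)` on two lists of channel values would mimic stacking an edge layer over the watercolor wash at half transparency.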

Every image is different and how I approach it depends on the look I wish to achieve. By playing with the layer order, blending style and level of transparency I can achieve remarkable control over the look of the final image. Highlights can have one type of brush stroke, shadows another. Layers can be used to lighten areas and combined with layer masks. Individual layers can have filters applied to them or curves to provide even more control. I often sharpen the layers individually so I can use different radii on the unsharp mask filter to accentuate certain brush strokes.

I've found the technique works most successfully on my nature abstracts and find it helps to further abstract the subject and remove the initial focus on the details. What I have found amazing is actually how much tonal detail is still preserved in spite of the micro-painting process.

Thursday, May 28, 2009

Why I Photograph Rocks.

You may have noticed, if you’ve viewed my photographic portfolio, that I like to photograph rocks... well rocks and plants actually. There’s no mystery in why this is so. It's because I’m basically lazy and these are the two most plentiful types of objects on the earth. These two subjects alone offer me a myriad of forms, colors, textures, and shapes with which to play.

My earliest photographic vacations were in Maine and I became fascinated with the variety of rocks across the state. Multicolored striated layers near Pemaquid, pink granite around Acadia, gray granite in the Western mountains but also a mix of different types of boulders deposited throughout each of these areas. These offered some beautiful contrasts with the native rocks.

The rocks of the Maine coast are some of the oldest on the planet. They exhibit the deformations and scars of their long journey through time. Mostly volcanic in origin, and compressed, deformed and transformed by tectonic pressures, these rocks were more recently scoured by receding glaciers, leaving the bedrock exposed to the further forces of erosion by wind and water. In places you can find layers of rock that have been folded back on themselves, creating very unique striated forms.

My trip to Hawaii in 1998 allowed me to go back to the beginning of the cycle and see the creation of new surface features via the deposition of ash and the expulsion of lava. Most fascinating were my trips into the Kilauea and Haleakala craters, where I found all sorts of new forms, textures and colors to interact with. These landscapes were of another world and I really felt like I was on another planet as I descended into Haleakala.

I’m really looking forward to an upcoming trip back to the Big Island of Hawaii and especially an opportunity to explore Kauai for the first time. That trip will take me to a much older land than that on Maui, which has been further transformed by erosion. I anticipate coming home with a few good rock images.

Friday, February 6, 2009

Reflecting on Twenty-Five Years with the Macintosh

The Mac Released My Inner Artist.

This year, 2009, marks the 25th anniversary of the Macintosh, and June the 25th anniversary of the purchase of my first computer, a Macintosh 128K. It would turn out to be one of the most significant decisions of my career, perhaps my life. I won’t retell the whole story here; you can read more about it in My Personal Digital History on my website. Suffice it to say that the computer, and specifically the innovative Macintosh, enabled me for the first time to effectively translate images in my head to images on paper.

From a very early age I think I aspired to be an artist of some kind. I discovered in the first grade, however, that just writing with a pencil was painful. I dreaded the daily writing exercises; my hands would cramp up after a few lines. I wasn’t able to control the amount of pressure I was applying to the pencil and constantly broke the tips. I would complain but was soon “convinced” by the nuns that it must be all in my mind since no one else had trouble.

I took some art classes in junior high but became frustrated at how difficult it was to get my hands to do what I wanted as they quickly fatigued from just holding a brush. In high school I tried drafting but that too became too tedious and frustrating as I spent more time erasing than drawing. Finally, by the end of high school I discovered filmmaking and animation, which didn’t demand superb manual dexterity and endurance to become a skilled image maker. While studying film at RIT I discovered I also enjoyed creating images with a still camera. Since it was much less expensive than filmmaking I decided that it would be my primary medium.

After RIT I worked as a photographic test technician for Kodak at night and pursued my free-lance fine art photography/color printing business during the day. I bought the Mac ostensibly to create better looking documents (letters, invoices, receipts, print labels, etc.) for my free-lance business but soon discovered it might have lots of other applications.

My entry level position with Kodak as a photo technician was primarily click and wind. We had to actuate (wind and trip -10) cameras and then write test reports in triplicate with pen, with no mistakes, or start over. Five years of these activities were torture to the point I could barely hang on to a pen, let alone write my report, at the end of my shift. I would have to take breaks every hour or so to go to the restroom and soak my hands in hot water until I could move them again.

At any rate, I realized that the Mac could eliminate the hand written reports by using text entry software, database software and the reports could even be distributed over the brand new networks that were starting to appear. So I suggested with a report I created on my Mac that they automate data entry and collection in the lab.

The Macintosh and especially the ingenious mouse finally gave me the ability to draw without pain. The mouse was much easier to hold than a pen or brush and the Undo command gave me the ability to correct mistakes (and random muscle twitches) without defacing or tearing up the paper with an eraser.

So from MacPaint and MacDraw, I progressed to Illustrator, Photoshop, Strata3D and finally Poser. Now, nearly two decades after I first beta tested those programs, I’m still using those same four apps. Photography is still a part of my creative process, but real world photography less and less as my mobility declines. Fortunately, the computer has given rise to virtual photography as my alternative.

Labels:

draw,

fibromyalgia,

mac,

paint,

twenty-five,

years

Monday, December 8, 2008

How Does Lenticular Printing Work?

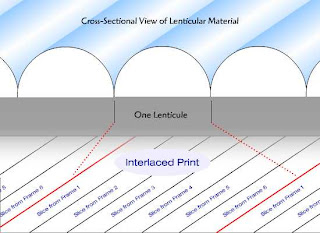

A Very Simplified Illustration - The cyan stripes represent View 1 and the magenta stripes View 2. Most lenticular prints are made from anywhere between 10 - 30 views. (It was too much work to make even 10 stripes per lenticule)

Traditional stereo viewers work by displaying two different perspectives of a scene, usually taken by a stereo camera with two lenses an inch or so apart, just like your eyes. When viewed with a stereo viewer each eye sees the scene from a slightly different perspective and, just as when we view scenes in real life, the scene is perceived as three-dimensional.

Lenticular prints work by taking a series of captured or rendered views (usually between 8 and 24) and interlacing them to form one very high resolution image. The interlacing software takes the multiple camera views (or frames) and slices them up into many vertical strips and then basically collates these strips putting one strip from each view under each lenticule (see image above). When you view the final print the lenses will present each eye with a different view creating a 3D image.
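The collating step described above can be sketched in a few lines. This is a simplified illustration, not how any particular interlacing product works: it assumes every view has already been resized so that it has exactly one pixel column per lenticule, and the left-to-right strip order under each lens is my own assumption (real software may reverse it to account for the lens flipping the image). The function name `interlace` is hypothetical.

```python
def interlace(views):
    """Interlace a list of equally sized views into one image.

    Each view is a list of rows, and each row is a list of pixels.
    Assumption: each view has one pixel column per lenticule, so the
    output is len(views) times wider than any single view.
    """
    if not views:
        raise ValueError("at least one view is required")
    height = len(views[0])
    width = len(views[0][0])
    for view in views:
        if len(view) != height or any(len(row) != width for row in view):
            raise ValueError("all views must have the same dimensions")

    interlaced = []
    for y in range(height):
        row = []
        for lenticule in range(width):
            # Under each lenticule, place one strip (here a single
            # pixel column) from every view, in view order.
            for view in views:
                row.append(view[y][lenticule])
        interlaced.append(row)
    return interlaced


# Two tiny one-row "views", each two columns wide.
view_1 = [["a0", "a1"]]
view_2 = [["b0", "b1"]]
result = interlace([view_1, view_2])
```

Here `result` holds one row in which the columns alternate between the two views, which is the same pattern as the cyan and magenta stripes in the illustration.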

3D is just one of the effects possible with lenticular. If you rotate the lenticular sheet so that the lenticules run horizontally you can use it to make animated hand held cards. Some of the animation effects possible are a 2 or 3 image flip, zooms, morphs and very short animations with limited motion.

Some combinations of effects are also possible, for example 3D with a flip or animations of color and sometimes small motions. Personally, I've found that motion is often too distracting on a 3D image.

What is 3D lenticular imaging?

Three-D Lenticular Imaging is a 2D printing process for creating auto-stereographic 3D prints and transparencies. An auto-stereographic 3D print does not require 3D glasses, stereo viewers or any other aids to view an image in 3D.

Lenticular technology, in effect, puts the glasses on the print instead. The image is often printed on paper and then laminated with a sheet of lenticular material which is extruded, embossed or engraved with a series of vertically aligned, plano-convex, cylindrical lenses called lenticules.

The image can also be printed directly onto the plastic lens material. This is the method used by most commercial printers because it eliminates the laminating (and manually aligning) step which is very labor intensive. Lenticular prints can be created in small quantities on an inkjet printer, photographically, or in large volumes using presses that print direct to the lens material.

These lens sheets are available in a number of different lens pitches. Lens pitch is the number of lenticules per inch a sheet has. Typically, they range from 15 lpi up to 200 lpi or more. Generally, the lower the pitch the further away the optimum viewing distance. Large low pitch sheets (thicker, less flexible material) are used for very large displays and the higher pitched (thinner, more flexible material) sheets are for items like postcards and business cards. I read in an industry trade mag that some billboard-sized lenticulars have used lenses with lenticules that are 1 or 2 inches wide each.
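Lens pitch also limits how many views a given printer can place under each lens, since every view needs at least one printed column per lenticule. The short sketch below works through that arithmetic. The 720 dpi and 60 lpi figures are illustrative assumptions rather than settings taken from my own workflow, and the function names are hypothetical.

```python
def lenticule_width_inches(lpi):
    """Width of a single lenticule for a sheet with the given pitch."""
    return 1.0 / lpi

def max_views(printer_dpi, lpi):
    """Whole number of printed columns that fit under one lenticule,
    which is the most views the print can interlace."""
    return int(printer_dpi // lpi)

# Assumed example values: a 720 dpi print behind a 60 lpi sheet.
views_available = max_views(720, 60)
```

With those assumed numbers there is room for 12 columns under each lens, so up to 12 views could be interlaced.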

Surprisingly, lenticular technology has been around since the early 1900’s. It was originally used in several color processes including Technicolor and Kodachrome film. Thanks to computer and digital imaging technologies developed in the 1990’s it has made a big comeback. These new technologies have made it possible to create stunning, high resolution, high quality 3D images.

The technique I developed ten years ago was a simple method of turning 3D scenes I had created in Strata 3D into a series of images for interlacing. The process is the same regardless of which 3D application you use as long as you can animate the camera.

Out of necessity I had to learn how to make my own lenticulars. It can be done, but you need to find some interlacing software (a specialized program like this can be quite expensive, the one I use is $2500, it also does 2D to 3D conversion, but there are other interlacer only versions that start at around $100), a high resolution printer (I use an Epson R220 and 3800), a cold laminator and 3D lenticular lens material and an optically clear adhesive (you can buy sheets with this adhesive already applied).

The process is time consuming (so you’d never want to do large quantities this way). I only use it for prototyping, one-offs and some portfolio samples. It requires quite a bit of skill to align the lens with the print and then get it through the laminator while still keeping it aligned (my success rate is only about 1 in 3). It’s really so much easier to let a professional printer deal with the technical details of lenticular printing.

Next time: How Does it Work?

Labels:

3d,

art,

lenticular,

photography,

printing,

stereo,

virtual

What is Virtual Photography?

by Peter J. Sucy

Most likely I’m not the first to have coined this term, but I’ll attempt to explain what I believe it is and why I’ve chosen it as my primary medium. For you photographers out there, virtual photography offers a photographic tool set unlike any you would ever find in the real world.

Imagine you had a studio where the laws of physics don’t apply. In this studio your camera does not need a tripod and is not affected by gravity. If you put your camera in a spot it will stay there; no one can accidentally bump into it except maybe you.

Now imagine that the camera has a zoom lens with a range of 5mm to 1600mm. The f-stop and shutter speed are adjustable but every image can be properly exposed no matter what the settings. There is a switch to turn off depth of field so everything is in focus at any f-stop. This camera can even be programmed to follow a path over time, moving through space by itself and recording frames (at a rate selected by you) as it goes. Just about every setting can be manipulated over time as well, so you can zoom and pull focus while moving, for example.

Your lighting system is even more magical. Your lights can be placed anywhere you need them and they won’t show up in your scene except by their illumination, so there are no potentially dangerous and unsightly light stands and cords. You can change a light from a variable angle spotlight, to an immense soft box, to a point source (great for getting light into those hard to reach places) or even an infinite source like the sun. Every light’s brightness and color can easily be set with a few dials. Each light can have its shadows turned on or off or made soft or sharp, and can even have gels and effects like mist and fog applied. The spotlights can even be configured to automatically point at and follow any object in the scene as it moves.

Now here is where it really begins to get exciting. Imagine you could buy a highly detailed set like a city block consisting of several buildings, a street and an alleyway, or a futuristic sci-fi set of a spaceship interior for under $30 and have them delivered to you in minutes without ever leaving your chair.

The same goes for actors/models, backdrops, scenery, costumes, props, and even lights. There are numerous online establishments that provide everything you could imagine for your magical studio. Numerous male and female characters, clothing and period costume, sets, props and more to create your own virtual visions.

These actors are extremely cooperative, posing just the way you want them and holding perfectly still for as long as you want. You can buy libraries of pre-set poses and change the actor’s pose with a click of a button. Move your timeline out a few seconds and select a different pose and you have just created an animation of the character moving from one pose to the other over the time you set on the timeline.
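Behind the scenes, moving between two poses on a timeline comes down to interpolation. The sketch below shows the simplest version, a straight linear blend of joint angles between two keyframes. It is an assumption for illustration only: the 3D programs mentioned in this post may use smoother spline curves, and the pose dictionaries, joint names and function name are all hypothetical.

```python
def interpolate_pose(pose_a, pose_b, t_start, t_end, t):
    """Linearly blend two poses, each a dict of joint name -> angle
    in degrees, for a time t between two keyframes."""
    if t_end <= t_start:
        raise ValueError("t_end must be later than t_start")
    # Fraction of the way from the first keyframe to the second,
    # clamped so times outside the range hold the nearest pose.
    fraction = (t - t_start) / (t_end - t_start)
    fraction = max(0.0, min(1.0, fraction))
    return {joint: pose_a[joint] + (pose_b[joint] - pose_a[joint]) * fraction
            for joint in pose_a}

# Hypothetical poses keyed two seconds apart on the timeline.
arm_down = {"shoulder": 0.0, "elbow": 0.0}
arm_raised = {"shoulder": 80.0, "elbow": 90.0}
halfway = interpolate_pose(arm_down, arm_raised, 0.0, 2.0, 1.0)
```

Sampling this function once per frame between the two keyframes gives the in-between poses that make up the animation.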

Once you’ve orchestrated your set, the lighting, the actors and framed your scene with your camera you are ready to take the picture. You can choose to produce a single frame or create an entire movie.

Is this some future speculation? No, you can have your own virtual studio today. All you need is a 3D modeling/rendering software program for your PC like 3DMax, Poser, Strata3D, Bryce, Vue or numerous others that are available. Many offer free trials and there are even free 3D programs available. Daz3D (one of the larger content providers) offers their Daz Studio software free for the download (they want you to buy their content of course).

Content Paradise, another online 3D content provider, is currently offering the previous version of Poser (a popular 3D studio program designed primarily for human figure models) for $29.00 or the current version for only $99, both great bargains. The programs I mentioned also come with quite a bit of content built in, so there are figures and props to work with right out of the “box”.

Renderosity is probably the largest content provider with hundreds of artists providing thousands of models and textures for you to buy and download. All of these sites serve as communities for thousands of artists and many offer online tutorials and forums where you can ask questions and get answers from some very talented artists. There are also user galleries where you can post your work for all to see and comment on.

My 3D work became much more productive when I discovered these communities and content providers. Many of my early 3D scenes took months and a few even years because I had to build and texture all my own models. Nowadays I can usually complete an entire 3D scene in less than a week because I can buy most of the models I need and re-texture them if needed. The entire set and prop budget for many scenes is usually well under $100.

I’ve found that my field photography has also much improved with the experience gained in the virtual studio. So if you’re looking for something to do when you’re stuck at home, consider getting yourself a virtual studio. If you already have the computer it’s pretty cheap to get into as long as you avoid becoming addicted to buying new content.
